

#1 2016-08-17 16:17:25

- jcarletto27

- Member

- Registered: 2016-08-10

- Posts: 17

Adventures in Dynamic Cure Times

Hi All,

So far this is the closest I've managed to get to a dynamic cure time. As with most things found in nature, the rate is logarithmic at its core, so I tried to capture that in the formula. A larger area requires less energy and therefore less time; smaller areas require more energy and so more time.

This is my cure formula for a 0.04 mm layer height:

{(([[LayerNumber]]<6)*12.25)+(([[LayerNumber]]<76)*6.125)+((912/[[LargestArea]])<2)*2+((912/[[LargestArea]])>4.1)*4.1+((912/[[LargestArea]])>2)*((912/[[LargestArea]]))*((912/[[LargestArea]])<4.1)}

This gives me cure times ranging from 2 seconds up to 4.1 seconds for my normal layers, ~20 seconds for my first 5 layers, and ~8 seconds for the next 70 layers.

The "912" comes from measuring the minimum cure time for a known area and multiplying the two: in my case the area was 456 mm² and the minimum cure time was 2 seconds, so 456 × 2 = 912.
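To check what the expression above actually evaluates to, here is a literal Python transcription. It relies on the same trick the formula uses: each comparison counts as 1 when true and 0 when false, which is also how Python booleans behave under multiplication. The function name is hypothetical, and layer numbering is whatever NanoDLP passes in.

```python
def post1_cure_time(layer_number: int, largest_area: float) -> float:
    """Literal transcription of the 0.04 mm layer formula above.

    Each comparison is a bool, which Python multiplies as 1 (True) or 0 (False),
    mirroring how the expression switches its terms on and off.
    """
    ratio = 912 / largest_area  # calibration constant divided by the current area
    return ((layer_number < 6) * 12.25            # extra burn-in for layer numbers below 6
            + (layer_number < 76) * 6.125         # extra time for layer numbers below 76
            + (ratio < 2) * 2                     # floor: 2 s for large areas
            + (ratio > 4.1) * 4.1                 # ceiling: 4.1 s for small areas
            + (ratio > 2) * ratio * (ratio < 4.1))  # in between: use the ratio itself


for layer, area in ((1, 912), (10, 912), (100, 304), (100, 100)):
    print(layer, area, post1_cure_time(layer, area))
```

One thing the transcription makes visible: when 912/[[LargestArea]] is exactly 2 or exactly 4.1, none of the three area terms is true, so the area part contributes 0 seconds for that layer. Using `>=` and `<=` in the middle term would close that gap.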

It can really be broken down like this:

(Known Cure Time × Known Cure Area) / Layer Area

Then add some hard upper and lower bounds so you don't cure a small area for too long, or a large area for too little.
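The same rule written with an explicit clamp, as a minimal Python sketch. The function names are hypothetical, and it assumes cure time scales with the inverse of the area between the chosen bounds, as described above. Using `min`/`max` also gives a well-defined value when the ratio lands exactly on a bound.

```python
def calibration_constant(known_time_s: float, known_area_mm2: float) -> float:
    """Product of a measured minimum cure time and the area it was measured on."""
    return known_time_s * known_area_mm2


def area_cure_time(layer_area_mm2: float, k: float, lower_s: float, upper_s: float) -> float:
    """Inverse-area cure time k / area, clamped to hard lower and upper bounds."""
    if layer_area_mm2 <= 0:
        raise ValueError("layer area must be positive")  # avoid dividing by zero
    return min(max(k / layer_area_mm2, lower_s), upper_s)


k = calibration_constant(2, 456)  # 912, the constant from the first post
for area in (100, 304, 456, 2000):
    print(area, area_cure_time(area, k, 2, 4.1))
```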

Note: make sure Pixel Dimming is off; it tends to work against dynamic cure.

Last edited by jcarletto27 (2016-08-17 16:17:50)


#2 2016-08-18 19:50:05

- jcarletto27

- Member

- Registered: 2016-08-10

- Posts: 17

Re: Adventures in Dynamic Cure Times

I'm now testing a formula based more on the Kudo3D method: cure time based on layer number rather than on area.

This is my Dynamic Cure Time formula:

{(([[LayerNumber]]<6)*15)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<2)*(([[LayerNumber]]*100/[[TotalNumberOfLayers]])>0)*15)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<11)*(([[LayerNumber]]*100/[[TotalNumberOfLayers]])>1)*15)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<26)*(([[LayerNumber]]*100/[[TotalNumberOfLayers]])>10)*4)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<51)*(([[LayerNumber]]*100/[[TotalNumberOfLayers]])>25)*3)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<101)*(([[LayerNumber]]*100/[[TotalNumberOfLayers]])>50)*2)}

The first 5 layers, and any layers under 2% of the total, get a burn-in cure time of 15 + 15 seconds. Then 1% to 10% get 15 seconds, 11% to 25% get 4, 26% to 50% get 3, and the last half gets my normal cure time of 2 seconds.
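Note that [[LayerNumber]]/[[TotalNumberOfLayers]] on its own is a fraction between 0 and 1, so it has to be multiplied by 100 before it can be compared with percentage thresholds such as 2, 11 or 26. A minimal Python sketch of the bucketed schedule described above, assuming layers are numbered from 1 (an assumption); the function name is hypothetical:

```python
def layer_schedule_cure_time(layer_number: int, total_layers: int) -> float:
    """Cure time (s) from print progress, summing 0/1 bucket terms like the formula."""
    pct = layer_number * 100 / total_layers  # progress as a percentage, not a fraction
    return ((layer_number < 6) * 15   # first five layers
            + (0 < pct < 2) * 15      # under 2% of the print
            + (1 < pct < 11) * 15     # roughly 1% to 10%
            + (10 < pct < 26) * 4     # 11% to 25%
            + (25 < pct < 51) * 3     # 26% to 50%
            + (50 < pct < 101) * 2)   # last half


for layer in (1, 15, 50, 200, 400, 900):
    print(layer, layer_schedule_cure_time(layer, 1000))
```

Because the "under 2%" and "1% to 10%" buckets overlap, layers between 1% and 2% also get 15 + 15 seconds.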

This, along with a dynamic lift-rate formula, makes for some decent results:

G1 Z{[[LayerPosition]]+[[ZLiftDistance]]}
F{((([[LayerNumber]]*100/[[TotalNumberOfLayers]])>0)*25)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])>10)*5)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])>20)*10)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])>30)*15)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])>40)*10)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])>50)*10)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])>60)*10)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])>70)*10)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])>80)*10)};

Alternatively, something like this would work similarly:

{((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<101)*2)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<75)*1)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<50)*2)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<25)*5)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<10)*10)+
((([[LayerNumber]]*100/[[TotalNumberOfLayers]])<2)*30)}

Last edited by jcarletto27 (2016-08-18 20:16:21)
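Both formulas in this post are sums of threshold steps, so the value at any point in the print is the running total of every step whose test passes. A small Python sketch that evaluates such step lists, assuming progress is expressed as a percentage (layer number × 100 / total layers); the helper and list names are hypothetical:

```python
from __future__ import annotations


def step_sum(pct: float, steps: list[tuple[str, float, float]]) -> float:
    """Add up the value of every (op, threshold, value) step whose test passes."""
    total = 0.0
    for op, threshold, value in steps:
        passed = pct > threshold if op == ">" else pct < threshold
        total += passed * value  # True counts as 1, False as 0
    return total


# F (lift speed) steps from the G-code line: (pct > threshold) * value
LIFT_STEPS = [(">", t, v) for t, v in [(0, 25), (10, 5), (20, 10), (30, 15), (40, 10),
                                       (50, 10), (60, 10), (70, 10), (80, 10)]]

# Steps from the alternative formula: (pct < threshold) * value
ALT_STEPS = [("<", t, v) for t, v in [(101, 2), (75, 1), (50, 2), (25, 5), (10, 10), (2, 30)]]

for pct in (1, 15, 35, 60, 95):
    print(pct, step_sum(pct, LIFT_STEPS), step_sum(pct, ALT_STEPS))
```

With these steps the lift value ramps from 25 to 105 over the print, while the alternative formula drops from 50 at the very start to 2 over the last quarter.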


#3 2016-09-27 03:57:06

- backXslash

- Member

- Registered: 2016-03-25

- Posts: 151

Re: Adventures in Dynamic Cure Times

@jcarletto27

Any chance I could enlist your help figuring out a dynamic cure formula? Or could you post a guide on understanding it? I'm having some real trouble wrapping my head around it, but I already made a thread about it here.

I've got a print I need to get to come out for a customer, so I'm stressing about it a bit. Any help I can get is much appreciated.


#4 2016-09-27 12:38:31

- jcarletto27

- Member

- Registered: 2016-08-10

- Posts: 17

Re: Adventures in Dynamic Cure Times

So, it must be said that while my formula works for ~80% of my prints, it can sometimes undercure the support structure. Because the cure time is driven by [LargestArea], a layer with hundreds of tiny support dots and one huge area gets the short time meant for large areas, so the formula isn't as useful there. For that case I would think just using the Pixel Dimming feature might be better, but I've never managed to get a decent print while using it.

Ideally, we could load the model file or slices, then load the support structure model or slices, and print both with alternating times, similar to how the calibration feature works. That would give us the best of both worlds: longer cure times for the small pinprick supports that don't need detail, and a slightly undercured model to preserve the most detail.

But if you're interested in my cure formulas, I whipped up a Google spreadsheet with everything in it. Only change the data in the blue cells.

https://drive.google.com/open?id=1OMntn … nJwcsa0btY

The process for determining your magic number is fairly simple. Print a cube or other object with a single, known layer size (you can even use the calibration function), find the minimum cure time for that layer size, and enter it along with the [LayerArea] or [LargestArea] into the spreadsheet. (I find using [LargestArea] makes more sense.)

Once that's worked out, you can just copy the formula to the right and paste it into the Dynamic Cure Time field in your profile.
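The spreadsheet itself isn't reproduced here. As a rough, hypothetical illustration of the same idea, this Python sketch builds an area-based expression in the shape of the formula from the first post (burn-in terms omitted) from a measured minimum cure time and area. The helper name and the choice of bounds are assumptions, not the spreadsheet's actual logic; the middle term uses `>=`/`<=` so a ratio sitting exactly on a bound still gets a cure time.

```python
def build_area_formula(known_time_s: float, known_area_mm2: float,
                       lower_s: float, upper_s: float) -> str:
    """Return a clamped k/[[LargestArea]] expression string (hypothetical helper)."""
    k = known_time_s * known_area_mm2        # calibration constant, e.g. 2 * 456 = 912
    r = f"({k:g}/[[LargestArea]])"            # the ratio term, reused in each clause
    return (f"{{({r}<{lower_s:g})*{lower_s:g}"                   # floor
            f"+({r}>{upper_s:g})*{upper_s:g}"                    # ceiling
            f"+({r}>={lower_s:g})*{r}*({r}<={upper_s:g})}}")     # in-range ratio


print(build_area_formula(2, 456, 2, 4.1))
```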

Note: LayerArea and LargestArea are calculated when NanoDLP processes the layers, so these two keywords are only available when you upload your model as an STL or slice file.

Last edited by jcarletto27 (2016-09-27 12:38:47)


#5 2016-09-27 15:16:31

- backXslash

- Member

- Registered: 2016-03-25

- Posts: 151

Re: Adventures in Dynamic Cure Times

You, sir, are a god. I'll be erecting shrines and idols in your honor.


#6 2016-09-27 15:37:20

- jcarletto27

- Member

- Registered: 2016-08-10

- Posts: 17

Re: Adventures in Dynamic Cure Times

Let me know how your results turn out, I'm happy someone else was able to get some use from my research.


#7 2016-10-05 03:55:46

- backXslash

- Member

- Registered: 2016-03-25

- Posts: 151

Re: Adventures in Dynamic Cure Times

jcarletto27 wrote: Let me know how your results turn out, I'm happy someone else was able to get some use from my research.

Apparently I did something wrong, because NanoDLP decided that the formula isn't valid. It glitched out and basically cured each layer for its layer number in seconds (i.e., the 512th layer was cured for 512 seconds, the 600th layer for 600 seconds).

The formula your spreadsheet kicked out is:

{(([[LayerNumber]]<10)*90)+(([[LayerNumber]]<104)*45)+((2/[[LargestArea]])<9)*9+((2/[[LargestArea]])>16.2)*16.2+((2/[[LargestArea]])>9)*((2/[[LargestArea]]))*((2/[[LargestArea]])<16.2)}

#8 2016-10-05 06:56:25

- Shahin

- Administrator

- Registered: 2016-02-17

- Posts: 3,556

Re: Adventures in Dynamic Cure Times

Check the dashboard; most probably there is an issue with your file. [[LargestArea]] is 0, so the expression is invalid (2/[[LargestArea]] => 2/0).

I would suggest handling [[LargestArea]] with more care: (([[LargestArea]]<1)*0+1))
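One way to express such a guard in the same 0/1 style is to add 1 to the divisor only when the reported area is below 1, e.g. `(2/([[LargestArea]]+([[LargestArea]]<1)))`. This is an untested suggestion, not Shahin's exact expression. The Python sketch below shows its effect on the area part of the formula from the previous post; the function names are hypothetical.

```python
def guarded_area(largest_area: float) -> float:
    """Add 1 to the divisor only when the reported area is below 1 (the 0/1 trick)."""
    return largest_area + (largest_area < 1)


def area_term(largest_area: float, k: float = 2, lower: float = 9, upper: float = 16.2) -> float:
    """Area part of the posted formula, evaluated with the guarded divisor."""
    ratio = k / guarded_area(largest_area)
    return ((ratio < lower) * lower
            + (ratio > upper) * upper
            + (ratio > lower) * ratio * (ratio < upper))


for area in (0, 0.1, 50, 500):
    print(area, area_term(area))
```

With the constant 2 in that formula, 2/[[LargestArea]] is below the 9-second floor for any area larger than about 0.22 mm², so the area term stays at 9 seconds for practically every layer.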


#9 2016-10-05 11:12:22

- backXslash

- Member

- Registered: 2016-03-25

- Posts: 151

Re: Adventures in Dynamic Cure Times

Shahin wrote: Check out the dashboard, the most probably you have issue on your file. So [[LargestArea]] is 0, so you will have invalid expression. (2/[[LargestArea]] => 2/0)

I would suggest handle [[LargestArea]] with more care. (([[LargestArea]]<1)*0+1))

As I said before, the notation you came up with just completely eludes me for some reason. I'll confess, I copied and pasted directly out of the spreadsheet linked above, per the instructions there.

To clarify, the expression is returning zero somewhere? An error in my file? My workflow is to create a model in Rhino, export a .ply to Meshmixer to add supports, export an STL to Asiga Stomp for slicing, then manually run through the SLC file before letting it be checked automatically in Sleece. If the SLC came out right, I upload it to NanoDLP. A problem with my file would imply NanoDLP errored somewhere during the conversion process, or that I missed something completely when checking the file in Sleece.

Or did I misinterpret the whole statement?

Sorry if that's long-winded or nitpicky; you wrote the software and I'd like to learn.


Last edited by backXslash (2016-10-05 11:14:15)


#10 2016-10-05 21:22:54

- Shahin

- Administrator

- Registered: 2016-02-17

- Posts: 3,556

Re: Adventures in Dynamic Cure Times

Sorry my last message was not clear enough.

For some reason the slicer recorded the [[LargestArea]] value as zero for your SLC file. Since your formula divides by [[LargestArea]], it returns a wrong value.


#11 2016-10-10 21:15:20

- backXslash

- Member

- Registered: 2016-03-25

- Posts: 151

Re: Adventures in Dynamic Cure Times

Shahin wrote: Sorry my last message was not clear enough.

For some reason slicer has been recorded [[LargestArea]] value as zero for your SLC file. As you have division by [[LargestArea]] in your formula. It returns wrong value.

Shahin -

jcarletto27 and I have been working on some dynamic cure stuff via email. From our experiments, it seems to me that it may be beneficial to have a few extra keywords for layer areas, such as smallest area or average area.

I'm not sure exactly how it would work, but I'm thinking that with the right processing and cure formula, we may be able to implement truly dynamic cures, kind of like how the resin test pillars work. The idea would be to sort pieces of the current slice into categories ([small], [medium] and [large] would be fine, I'm sure) and then specify known good cure times for those size ranges. The printer could then display the entire slice for the duration specified by [small], switching off the [large] pieces first, then the [medium], then the [small], and then move to the next layer.

Is that workable? Or am I dreaming?
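The staged-exposure idea above can be sketched as a schedule: every region of the slice is lit at once, and each is blacked out when the cure time for its size category has elapsed. This is a hypothetical Python illustration only; the `Region` type, the thresholds and the cure times are made up, and it says nothing about how NanoDLP's renderer would implement it.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """One connected island of a slice (hypothetical representation)."""
    name: str
    area_mm2: float


def category(area_mm2: float, small_max: float, medium_max: float) -> str:
    """Bucket a region by area; the two thresholds are user-chosen."""
    if area_mm2 <= small_max:
        return "small"
    if area_mm2 <= medium_max:
        return "medium"
    return "large"


def masking_schedule(regions, cure_s, small_max, medium_max):
    """Return [(time_s, [names switched off at that time]), ...] in time order.

    All regions start lit at t=0; each is masked once its category's cure time
    has elapsed, so the layer lasts as long as the longest category present.
    """
    off_at = {}
    for region in regions:
        t = cure_s[category(region.area_mm2, small_max, medium_max)]
        off_at.setdefault(t, []).append(region.name)
    return sorted(off_at.items())


regions = [Region("pin", 0.5), Region("strut", 8.0), Region("body", 300.0)]
cure_s = {"small": 6.0, "medium": 4.0, "large": 2.0}
print(masking_schedule(regions, cure_s, small_max=1.0, medium_max=20.0))
```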


#12 2016-10-11 07:31:47

- Shahin

- Administrator

- Registered: 2016-02-17

- Posts: 3,556

Re: Adventures in Dynamic Cure Times

It is workable (albeit with small delays), but it requires a lot of development and would put a very heavy load on the render engine. Are you sure this is not going to have the same effect as dimming?

The closest thing we could have without extensive development and other negative effects is a smallest area and a total area count. Maybe by using the smallest and largest areas and the total number of areas, you could choose a suitable cure time.


#13 2016-10-11 13:15:54

- backXslash

- Member

- Registered: 2016-03-25

- Posts: 151

Re: Adventures in Dynamic Cure Times

Shahin wrote: It is workable (albeit with small delays) but require lots of development and will add very heavy load on render engine. Are you sure this is not going to have same effect as dimming?

Closest thing which we could have without extensive development and other negative effects is to have smallest area and total area count. Maybe by using smallest and largest area and total number of areas you could choose suitable cure time.

Worth a shot, for sure! Can you add those keywords?


#14 2016-10-11 16:32:00

- Shahin

- Administrator

- Registered: 2016-02-17

- Posts: 3,556

Re: Adventures in Dynamic Cure Times

Sure, you can try them on the beta version


#15 2017-05-18 07:15:47

- 1125lbs

- Member

- Registered: 2017-03-15

- Posts: 81

Re: Adventures in Dynamic Cure Times

Shahin wrote: Sorry my last message was not clear enough.

For some reason slicer has been recorded [[LargestArea]] value as zero for your SLC file. As you have division by [[LargestArea]] in your formula. It returns wrong value.

Shahin -

jcarletto27 and I have been working on some dynamic cure stuff via email. It seems to me from our experiments that it may be beneficial to have a few extra keywords in regards to the layer areas, such as smallest area, or average area.

I'm not sure of exactly how it would work, but I'm thinking with the right processing and cure formula, we may be able to implement truly dynamic cures kind of like how the resin test pillars work. The idea would be to maybe sort pieces of the current slice into categories, [small] [medium] and [large] I'm sure would be fine and then specify known good cure times for those ranges of sizes. The printer could then display the entire slice for the duration specified by [small], turn off the [large] category first, then the [medium], then the [small], then move to the next layer.

Is that workable? Or am I dreaming?

It would be a dream! I'm trying to print a complex, very tiny part with capillary structures and some restrictions from the part. I have found that the same method would work for me: use the smallest area as the threshold for the critical smallest support area, then raise the cure exposure according to the smallest area.

Last edited by 1125lbs (2017-05-18 07:16:28)


#16 2017-05-18 07:19:06

- 1125lbs

- Member

- Registered: 2017-03-15

- Posts: 81

Re: Adventures in Dynamic Cure Times

I'm having trouble writing the formulas for this specific part. I divided the printing process into 5 steps and need to adjust the formulas for each. Could someone help me with an example? Thanks.


#17 2017-05-18 12:06:02

- jcarletto27

- Member

- Registered: 2016-08-10

- Posts: 17

Re: Adventures in Dynamic Cure Times

1125lbs wrote: I have an issue with how to write the formulas for this especific part, i divided the printing process on 5 steps, i need to adjust the formulas, our fellows could help me with some example? thanks

What are you going to base the splits on? Layer position, current height, LargestArea, SmallestArea, something else?

I can write out a couple of templates if you give me an idea of how you want it broken up.


#18 2017-05-18 12:29:04

- jcarletto27

- Member

- Registered: 2016-08-10

- Posts: 17

Re: Adventures in Dynamic Cure Times

If you're curious, this is the formula I've settled on for most of my printing lately.

FunToDo Deep Black Resin

50 micron Z

65 micron XY

Without projector bulb compensation (bulb at ~60% life left):

{(([[LayerNumber]]<=3)*45)+(ceil(2*(-1.345 * log([[TotalSolidArea]]) + 15.5))/2)}

With projector bulb compensation:

{(([[LayerNumber]]<=3)*45)+(ceil(2*(-1.345 * log([[TotalSolidArea]]) + 13))/2)}
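To sanity-check the numbers these two formulas produce, here is a Python transcription. It assumes `log` is the natural logarithm; if NanoDLP's `log` is base 10, the same coefficients give quite different times, so verify that before reusing them. Like the [[LargestArea]] formulas earlier in the thread, it is undefined when [[TotalSolidArea]] is 0 (`math.log(0)` raises an error). The function name and the `offset` parameter are hypothetical.

```python
import math


def log_cure_time(layer_number: int, total_solid_area: float, offset: float = 15.5) -> float:
    """Burn-in plus a log-area cure time, rounded up to the next 0.5 s.

    offset=15.5 is the uncompensated formula, offset=13 the bulb-compensated one.
    Assumes `log` means the natural logarithm (math.log).
    """
    burn_in = (layer_number <= 3) * 45  # 45 s extra for layer numbers up to 3
    base = -1.345 * math.log(total_solid_area) + offset
    return burn_in + math.ceil(2 * base) / 2  # ceil(2x)/2 rounds up to a 0.5 s step


for area in (10, 100, 1000):
    print(area, log_cure_time(10, area), log_cure_time(10, area, offset=13))
```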

#19 2017-05-18 18:22:44

- 1125lbs

- Member

- Registered: 2017-03-15

- Posts: 81

Re: Adventures in Dynamic Cure Times

1125lbs wrote: I have an issue with how to write the formulas for this especific part, i divided the printing process on 5 steps, i need to adjust the formulas, our fellows could help me with some example? thanks

what are you going to base splits on? layer position, current height, LargestArea, SmallestArea, something else?

I can write out a couple of templates if you give me an idea of how you want it broken up.

Hello jcarletto27,

A client came to me with a model that has very tiny features: it is 5 mm tall with internal mechanisms. I have already tried orienting it in every possible configuration, with no success.

I'm running experiments with a transparent, viscous material, and I believe overcure is the key...

Let me try to explain it simply:

From layer 0 to 21: still OK, with the same thickness or [TotalSolidArea].

From layer 23 to 35: the support features start to get sharp, so [smallest_area] decreases. At this point I need to raise the exposure time as the smallest area decreases.

From layer 35 to 37: the part starts to be drawn, but still with thin points. The part geometry has a dome-shaped base, so there are still some supports in the center; by inspecting the slices, I consider this the "critical smallest area" up to here.

From layer 37 on: I would like to calculate the exposure based on the largest area or the pixel difference (I will turn on pixel dimming at this point).

Any example just to remind me how to handle the functions inside NanoDLP would be helpful; I'm having difficulty dealing with the arguments and messages.

To be clear, I can see all the features in the layer preview, such as area count, total solid area, largest area, and smallest area... It's very clever and extremely necessary for defining a strategy, like preserving the XY projection or maintaining area for supports, and compensating so that very thin details become printable.

I'm running on a Pi 3 in direct control mode.

Best regards


#20 2017-05-18 19:10:34

- jcarletto27

- Member

- Registered: 2016-08-10

- Posts: 17

Re: Adventures in Dynamic Cure Times

You can try using this template, substituting your own values for the placeholders. This should be a fair starting point and works similarly to how Kudo3D determines cure time by layer height.

{(

(([[LayerNumber]]<=21)*Burn_in_Cure_Time)+

(([[LayerNumber]]>=22)*Support_Cure_Time*([[LayerNumber]]<=35))+

(([[LayerNumber]]>=36)*Detail_Point_Cure_Time*([[LayerNumber]]<=40))+

(([[LayerNumber]]>=41)*Layer_Cure_Time)

)}

If you want to use a formula for calculating how long you should cure based on area, you'll need to do some experimentation.
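A quick way to check a layer-range template like the one above for gaps or overlaps is to evaluate it per layer: for whole layer numbers, exactly one term should be active. A Python sketch using the template's placeholder names as parameters; the example values in the loop are made up, not recommendations.

```python
def template_cure_time(layer_number: int, burn_in_cure_time: float,
                       support_cure_time: float, detail_point_cure_time: float,
                       layer_cure_time: float) -> float:
    """Evaluate the layer-range template; exactly one range applies per layer."""
    n = layer_number
    return ((n <= 21) * burn_in_cure_time
            + (n >= 22) * support_cure_time * (n <= 35)
            + (n >= 36) * detail_point_cure_time * (n <= 40)
            + (n >= 41) * layer_cure_time)


# Made-up placeholder values, only to show which range each layer falls into.
for n in (0, 21, 22, 35, 36, 40, 41):
    print(n, template_cure_time(n, 8.0, 3.0, 2.0, 1.2))
```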

First, check how long it takes to cure a small area (5 mm × 5 mm, for example), then check how long it takes to cure a much larger area (15 mm × 15 mm, maybe).

If you can provide me with observed cure times and the sizes of the cured slices, I can whip up a formula for you like the one I showed above.
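One way to turn two such measurements into a formula shaped like the log formula posted earlier in this thread is a two-point fit of t = a·ln(A) + b. This Python sketch assumes that log-linear model fits your resin and that NanoDLP's `log` is the natural logarithm; the burn-in term is left out, and the measurement values are made up for illustration.

```python
import math


def fit_log_cure(area1: float, time1: float, area2: float, time2: float) -> tuple:
    """Fit t = a*ln(A) + b through two measured (area, time) points."""
    a = (time2 - time1) / (math.log(area2) - math.log(area1))
    b = time1 - a * math.log(area1)
    return a, b


def to_expression(a: float, b: float) -> str:
    """Format the fit like the posted formula, rounded up to 0.5 s."""
    return f"{{ceil(2*({a:.3f} * log([[TotalSolidArea]]) + {b:.2f}))/2}}"


# Made-up measurements: 5 x 5 mm (25 mm^2) cured in 6 s, 15 x 15 mm (225 mm^2) in 4 s.
a, b = fit_log_cure(25.0, 6.0, 225.0, 4.0)
print(to_expression(a, b))
```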


#21 2017-05-18 23:36:04

- 1125lbs

- Member

- Registered: 2017-03-15

- Posts: 81

Re: Adventures in Dynamic Cure Times

jcarletto27 wrote: you can try using this template, substituting your own values for the place holders. This should be a fair starting point and replicates a similar function to how Kudo3Ds determines cure time by layer height.

{( (([[LayerNumber]]<=21)*Burn_in_Cure_Time)+ (([[LayerNumber]]>=22)*Support_Cure_Time*([[LayerNumber]]<=35))+ (([[LayerNumber]]>=36)*Detail_Point_Cure_Time*([[LayerNumber]]<=40))+ (([[LayerNumber]]>=41)*Layer_Cure_Time) )}

if you want to use a formula for calculating how long you should cure based on area you'll need to do some experimentation.

first check to see how long it takes to cure a small area (5 mm x 5 mm for example) then check to see how long it takes to cure a much larger area ( 15 x 15, maybe).

if you can provide me with observed cure times and size of cured slices I can whip up a formula for you like I showed above.

I'm working with values of around 1 s exposure at 25 µm. I even tried reducing the layers to 5-10 µm with a shorter exposure time to prevent overcure, and adjusted the grayscale. I will run another test table to be more certain about the values involved.

As we know, the cure depth is given by

$$C_d = D_p \ln\left(\frac{E}{E_c}\right)$$

where $C_d$ is the cure depth, $D_p$ the depth of penetration, $E$ the exposure delivered by the source (lamp or LED), and $E_c$ the critical exposure. $D_p$ and $E_c$ are constants determined by the resin formulation's kinetics.

I spent months adjusting the concentration of reactive diluents and initiators/inhibitors trying to improve the resin's resolution; working with a very viscous, transparent resin seems to be more of a challenge than I thought.

I experience overcure with this stuff.

But I believe these formulas will help with the issues involved. I will perform the test and come back to tell you what I got.

Thank you, guys; it will help me a lot.
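The cure depth relation in this post can also be inverted to estimate the exposure needed for a target depth, E = E_c·exp(C_d/D_p), which shows why overcure creeps in: each extra D_p of depth needs e (about 2.7) times more exposure. A Python sketch; the resin constants and irradiance below are hypothetical placeholders, not measured values, and units just need to be consistent (mJ/cm² divided by mW/cm² gives seconds).

```python
import math


def cure_depth(exposure: float, dp: float, ec: float) -> float:
    """Working curve Cd = Dp * ln(E / Ec); no cure at or below the critical exposure."""
    if exposure <= ec:
        return 0.0
    return dp * math.log(exposure / ec)


def exposure_for_depth(target_depth: float, dp: float, ec: float) -> float:
    """Invert the working curve: E = Ec * exp(Cd / Dp)."""
    return ec * math.exp(target_depth / dp)


# Hypothetical resin: Dp = 0.1 mm, Ec = 10 mJ/cm^2; source irradiance 10 mW/cm^2.
dp_mm, ec_mj, irradiance_mw = 0.1, 10.0, 10.0
for depth_mm in (0.025, 0.05, 0.1):
    e = exposure_for_depth(depth_mm, dp_mm, ec_mj)
    print(f"{depth_mm} mm needs {e:.1f} mJ/cm^2, about {e / irradiance_mw:.2f} s")
```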


#22 2017-05-19 08:54:50

- 1125lbs

- Member

- Registered: 2017-03-15

- Posts: 81

Re: Adventures in Dynamic Cure Times

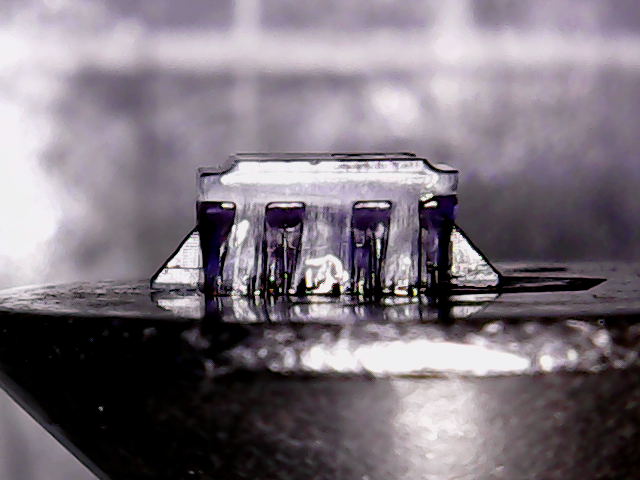

First test.

Sections 1, 2, 3:

burn-in, support', support", and the peak

Burn-in: 100 µm

Support: 25 µm

Last edited by 1125lbs (2017-05-19 08:56:03)


#23 2017-05-19 10:06:31

- 1125lbs

- Member

- Registered: 2017-03-15

- Posts: 81

Re: Adventures in Dynamic Cure Times

Overcure!!!


#24 2017-05-19 10:11:50

- 1125lbs

- Member

- Registered: 2017-03-15

- Posts: 81

Re: Adventures in Dynamic Cure Times

before overcure


#25 2017-05-19 12:42:13

- jcarletto27

- Member

- Registered: 2016-08-10

- Posts: 17

Re: Adventures in Dynamic Cure Times

What size is that model? It appears there's a large flat surface you're trying to print, and those are notoriously difficult with bottom-up printers. Have you considered using incredibly slow lift speeds for that section? Something like 1 mm per minute might preserve more detail, if you're noticing it's separating.
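At 1 mm per minute the lift itself becomes a noticeable part of the print time, since each layer's lift takes roughly the lift distance divided by the speed. A small Python sketch of that arithmetic; the 5 mm lift distance, 20-layer flat section and 50 mm/min normal speed are made-up values, and only the upward move is counted.

```python
def lift_minutes(lift_distance_mm: float, speed_mm_per_min: float) -> float:
    """Time for one upward lift at a constant speed."""
    return lift_distance_mm / speed_mm_per_min


def added_minutes(layers: int, lift_distance_mm: float,
                  slow_mm_per_min: float, normal_mm_per_min: float) -> float:
    """Extra print time from lifting a range of layers slowly instead of normally."""
    per_layer = (lift_minutes(lift_distance_mm, slow_mm_per_min)
                 - lift_minutes(lift_distance_mm, normal_mm_per_min))
    return layers * per_layer


# Hypothetical: 5 mm lift, 20 layers in the flat section, 50 mm/min normal speed.
print(added_minutes(20, 5.0, 1.0, 50.0))
```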

Just note that you won't see any detail smaller than your XY resolution. Consider it like this:
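To put numbers on this, take the 65 micron XY figure from jcarletto27's settings earlier in the thread as an example. Under a simple model where a pixel is lit only if its center falls inside the feature (an assumption; slicers that anti-alias or dim edges behave differently), a feature's width in pixels can only be the floor or the ceiling of width / pixel size, depending on where it sits on the grid. The function name is hypothetical.

```python
import math


def lit_pixels_range(feature_um: float, pixel_um: float = 65.0) -> tuple:
    """(min, max) pixels whose centers fall inside a feature of this width.

    Assumes center-sampled rasterization with no anti-aliasing; the exact
    count depends on where the feature sits relative to the pixel grid.
    """
    n = feature_um / pixel_um
    return math.floor(n), math.ceil(n)


for width in (30, 100, 130):
    print(width, lit_pixels_range(width))
```

A 30 µm feature, for example, either disappears or prints as a full 65 µm pixel.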
